Migrating to Grafana Cloud

This iteration of our local Kubernetes learning environment moves one component, Grafana, to the cloud. Previously, we described a mostly local system that includes a very small custom NodeJS application with a lot of instrumentation around it. The setup includes Prometheus, Alertmanager, Grafana, FluentBit, InfluxDB, and some other components like Ingress-Nginx. Now we use Grafana Cloud to visualize some metrics, with a focus on logging. After Slack, this is the second cloud-hosted element in this setup.

The focus of the article remains on getting you some kind of “turn-key” environment where you can try things yourself. We build on what we have done before but evolve and refactor where needed.

Grafana Cloud hosts Grafana and other services such as Prometheus and Loki, a log database. There is a reasonably sized free tier that is fully sufficient for our learning purpose. If you want to follow this exercise hands-on, you will need to create a free tier account at Grafana.net.

This article is self-contained, but before getting started, it is useful to review the previous article about logging. That article covers a fully local logging setup using FluentBit, InfluxDB, and Prometheus/Grafana, all running in K3D. The addition now is a second logging pipeline from FluentBit to a remote Loki instance, a remote write of local metrics to a remote Prometheus, and a remote Grafana to visualize the data while keeping everything else in place. There are a few other changes compared to the earlier version:

- Reworked Logging in the NodeJS application: We changed the logging levels using the Winston package from the default “npm-style” to something that fits better with Grafana’s pre-configuration, inspired by their log level mapping. We also added the creation of log output for all six supported levels to test the color setting in Grafana.

- Simplified Application Dashboard: compared to the previous article, the

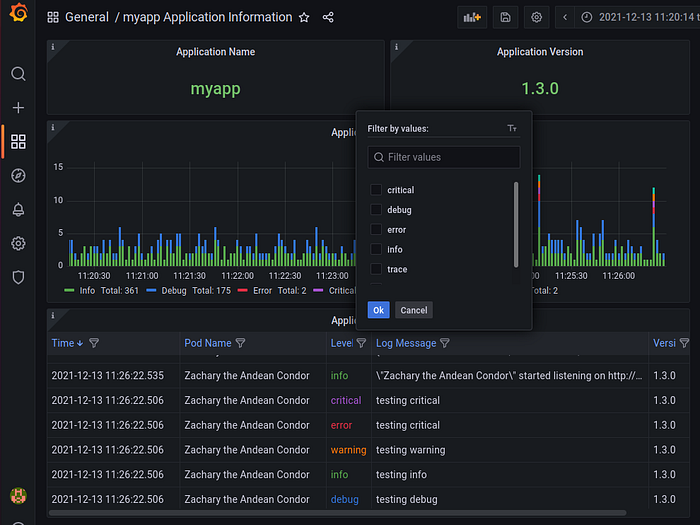

host_cpumetric is taken out and some of the static metrics have been removed, solely for the purpose of de-cluttering the custom dashboard. The new look of the dashboard in Grafana Cloud is shown in the screenshot above. - Additional scripts

grafana-cloud-deploy.shandgrafana-cloud-undeploy.shto deploy and remove the Grafana Cloud Agent, plus related additional configuration requirements inconfig.sh. The Grafana Agent takes care of scraping and remote writing metrics. - An enhanced (over-engineered?) script

app-traffic.shto generate some logs by calling the NodeJS application API.

The concept of the setup remains the same as before: it’s all local on your machine, plus additional Prometheus/Loki/Grafana instances in the cloud. The installation is driven by bash scripts, with a single command start.sh to get going. There is no persistence other than hosted services, i.e. a cluster restart starts really fresh.

Update February 13, 2022: There is a newer version of the software here with several updates.

What you need to bring

The setup is based on Docker 20.10, NodeJS 14.18, Helm 3, and K3D 5.2.1. Before getting started, you need to have the components mentioned above installed by following their standard installation procedures. The setup was tested on Ubuntu 21.04, but other operating systems will likely work as well. A basic understanding of bash, Docker, Kubernetes, Grafana, and NodeJS is expected. You will need a Slack account for receiving alert messages.

All source code is available on GitHub at https://github.com/klaushofrichter/grafana-cloud. The source code includes bash scripts and NodeJS code. As always, you should inspect code like this before you execute it on your machine, to make sure that no bad things happen.

Create a Grafana Cloud Account

As we are covering a hosted service, you need to set up an account before doing anything else… this account is different from your local Grafana installation, which we support in this setup as well.

Grafana Cloud offers hosted instances of Grafana and other components, such as Loki and Prometheus. We are going to create an account, and locally install the Grafana Agent.

To create a free account, you need to navigate to the Grafana website and “Sign up for Grafana Cloud”. There are several options to do so. You will set up an organization name in the process, which is reflected in your account URL, in my case it looks like this: https://grafana.com/orgs/klaushofrichter. There is also a subdomain for you, in my case https://klaushofrichter.grafana.net/ — this is how you access the Grafana instance that is hosted for you. In any case, please take note of the organization name, which you will need later.

Other than the organization name, we need to know one user ID for Prometheus, one user ID for Loki, a single API key that can be used for both services, and service URLs for both Prometheus and Loki. Prometheus and Loki instances are part of the free account with rate limitations, but these limits are well above what we consume for this experimentation. We can get the details from the Grafana Cloud Portal through the buttons marked in yellow:

API Key: Use the button in the left sidebar called “API Keys”. A page shows up that allows you to pick a name for the key and select a role. Roles with fewer permissions are better; for our purpose, “MetricsPublisher” is the best choice. You could name the key accordingly, e.g. “Metrics Publisher”, but any other name will do. Most important: copy the key and store it somewhere safe, as you may not see it again. If you lose it, generate a new key.

Loki User ID and URL: There is a Loki box on the portal; click “Details”. Among other things, you will see a URL and a user ID (numeric). You can always come back to this page if you need these values again. There is more content on the page, but we can ignore that. There is no need to generate another API key, although you can do that and possibly have different keys for different services.

Prometheus User ID and URL: Do the same as above, but for Prometheus: Pick the “Details” button and look for the “Remote Write Endpoint” and the “User name” (numeric). Everything else can be ignored at this time.

Configuration

There are a lot of scripts in the repository. Most of them are covered in the earlier article; here we look only at the new scripts related to Grafana Cloud and the configuration:

config.sh:

There are a few new items in the config file to authenticate access and navigate to your instances. The config.sh file is now separated into four parts: items you have to configure, items you might want to configure, items you don’t need to configure, and some checks. There is some explanation in the file itself, but let's have a look at the mandatory part:

- SLACKWEBHOOK: this is explained in a separate article. You need to create a Slack account and generate a webhook to receive alerts via Slack. You can place the URL directly into the config file, or put it elsewhere, ideally outside the scope of any source control that you may use.

- GRAFANA_CLOUD_ORG: this is the name of the organization that you created when you established a Grafana Cloud account, see the previous section.

- GRAFANA_CLOUD_METRIC_PUBLISHER_KEY: this is the API key that we created, see the previous section.

- LOKI_METRIC_PUBLISHER_USER: this is the Loki user ID from the Grafana organization overview, see the previous section.

- LOKI_METRICS_PUBLISHER_URL: this is the endpoint where logs are written to, taken from the Loki section discussed above.

- PROM_METRIC_PUBLISHER_USER: this is the Prometheus user ID from the Grafana organization overview, see the previous section.

- PROM_METRICS_PUBLISHER_URL: this is the remote-write endpoint where metrics are written to. It is shown in the Prometheus section of the Grafana Cloud Portal.
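As a rough illustration, the mandatory part of config.sh might look something like the sketch below. The variable names are the ones listed above; every value is a made-up placeholder, and the `check_required` helper is hypothetical and not taken from the repository.

```shell
# Hypothetical sketch of the mandatory part of config.sh.
# All values are placeholders; replace them with your own account details.
export SLACKWEBHOOK="https://hooks.slack.com/services/REPLACE/WITH/YOURS"
export GRAFANA_CLOUD_ORG="your-org"
export GRAFANA_CLOUD_METRIC_PUBLISHER_KEY="paste-api-key-here"
export LOKI_METRIC_PUBLISHER_USER="123456"
export LOKI_METRICS_PUBLISHER_URL="logs.example.invalid"
export PROM_METRIC_PUBLISHER_USER="654321"
export PROM_METRICS_PUBLISHER_URL="https://prometheus.example.invalid/api/prom/push"

# check_required (hypothetical helper): report every variable that is unset or
# empty and return non-zero if at least one is missing.
check_required() {
  missing=0
  for name in "$@"; do
    eval "value=\${${name}:-}"   # look up the variable whose name is in $name
    if [ -z "${value}" ]; then
      echo "config.sh: ${name} is not set" >&2
      missing=1
    fi
  done
  return "${missing}"
}

check_required SLACKWEBHOOK GRAFANA_CLOUD_ORG GRAFANA_CLOUD_METRIC_PUBLISHER_KEY \
  LOKI_METRIC_PUBLISHER_USER LOKI_METRICS_PUBLISHER_URL \
  PROM_METRIC_PUBLISHER_USER PROM_METRICS_PUBLISHER_URL \
  || echo "please complete config.sh before running ./start.sh" >&2
```

A check like this fails early with a readable message instead of letting a deployment start with empty credentials.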

TL;DR

Once we have a Grafana Cloud account and edited config.sh, here is the quickstart:

- Clone the repository and inspect the scripts (because you don’t want to run things like this without knowing what is happening).

- Edit config.sh per instructions above.

- ./start.sh: this will take a while, perhaps 10 minutes or so, to set up everything.

- Navigate to your Grafana Cloud account, log in, go to Dashboards/manage/import (something like https://YOUR-ORGANIZATION.grafana.net/dashboard/import), and select Upload JSON File. Pick the file ./grafana-cloud-dashboard.json from your local directory.

- You should see a screen similar to the shot on top of the article. If not, check out the Troubleshooting section at the end.

Grafana Cloud Agent to push metrics

We are using the script grafana-cloud-deploy.sh to install the Grafana Cloud Agent — this script is called by ./start.sh. There is also an uninstall script called grafana-cloud-undeploy.sh. These scripts automate the instructions given on the website.

The job of the agent is to take metrics and push them via “remote write” to the Prometheus instance hosted by Grafana. The agent is configured with a YAML file, grafana-cloud.yaml.template, which is automatically filled in with the configuration details we prepared earlier.

The scripts create a new namespace, grafana-cloud, and re-use metric sources that are installed through the kube-prometheus-stack Helm chart, see this article for details. This is configured as scrape_configs: in the file grafana-cloud.yaml.template. In addition to some standard metrics sources, we also push metrics from our own NodeJS application. But to keep in line with the free service limits, we don’t export other metrics (e.g. from FluentBit, ingress-nginx, etc).
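To make the substitution step more concrete, here is a minimal sketch of how the remote-write part of such a template could be rendered from the config.sh values. The keys `url` and `basic_auth` under `remote_write` are standard Prometheus remote-write settings; the function name `render_remote_write`, the placeholder values, and the heredoc approach are assumptions for illustration, and the actual grafana-cloud.yaml.template in the repository may look different.

```shell
# Placeholder defaults; in the real setup these values come from config.sh.
: "${PROM_METRICS_PUBLISHER_URL:=https://prometheus.example.invalid/api/prom/push}"
: "${PROM_METRIC_PUBLISHER_USER:=654321}"
: "${GRAFANA_CLOUD_METRIC_PUBLISHER_KEY:=paste-api-key-here}"

# render_remote_write (hypothetical): print a remote_write YAML fragment; the
# unquoted heredoc lets the shell substitute the variables.
render_remote_write() {
  cat <<EOF
remote_write:
  - url: ${PROM_METRICS_PUBLISHER_URL}
    basic_auth:
      username: ${PROM_METRIC_PUBLISHER_USER}
      password: ${GRAFANA_CLOUD_METRIC_PUBLISHER_KEY}
EOF
}

render_remote_write
```

The user ID acts as the basic-auth username and the API key as the password, which is why both values are needed in addition to the endpoint URL.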

FluentBit to push Logs to Loki

In order to push logs to Loki, we add an additional Output specification to the FluentBit configuration, see the end of the fluentbit-values.yaml.template file. This leaves the original pipeline to a local InfluxDB intact, as FluentBit can push data to multiple endpoints in parallel.

[OUTPUT]
    Name        loki
    Match       ${APP}
    host        ${LOKI_METRICS_PUBLISHER_URL}
    port        443
    tls         on
    tls.verify  on
    labels      app=${APP}
    http_user   ${LOKI_METRICS_PUBLISHER_USER}
    http_passwd ${GRAFANA_CLOUD_METRICS_PUBLISHER_PASS}

The definition above uses the Loki Output plugin that comes with FluentBit. We export the NodeJS application logs, which are already marked with the tag ${APP} when they are captured. The placeholder ${APP} is substituted with the application name in package.json; unless you changed that, it is myapp. We add a label to filter the logs in Grafana, and provide the details for the Loki endpoint.
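One detail worth double-checking: the `host` parameter of the Loki output takes a bare hostname, with port and TLS configured separately as shown above. If the value you copied from the Grafana Cloud Portal is a full URL, it would need to be reduced to the hostname first. Whether config.sh already takes care of this is not covered here; the helper `url_to_host` below is a hypothetical sketch using plain shell parameter expansion, and the example URL is made up.

```shell
# url_to_host (hypothetical helper): strip scheme, path, credentials and port
# from a URL so that only the hostname remains.
url_to_host() {
  host="${1#*://}"      # drop "https://" or "http://" if present
  host="${host%%/*}"    # drop the path
  host="${host##*@}"    # drop "user:password@" if present
  host="${host%%:*}"    # drop ":port" if present
  printf '%s\n' "${host}"
}

url_to_host "https://logs.example.invalid/loki/api/v1/push"   # prints logs.example.invalid
```

A value that is already a bare hostname passes through unchanged, so the helper is safe to apply in either case.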

The Dashboard for Grafana Cloud / Loki

Now that we push both logs and metrics to Grafana Cloud, we can navigate to the hosted Grafana instance at https://YOUR-ORG.grafana.net. You should first go to the left sidebar and find the Dashboards menu item, and select manage. From there you can find a Dashboard called (Home) Kubernetes Integration. If things worked out, you should see a nice green acknowledgment that metrics come in — this is the Grafana Agent reading metrics from three sources and pushing them to the hosted Prometheus instance.

There are a few additional dashboards preinstalled, which you can access through the Dashboard/Manage menu. But we are interested in our own logs: When running ./start.sh we also generated a dashboard that can be uploaded to Grafana Cloud. We can do that through the Dashboard/Manage menu, but then pick “Import” and select the generated dashboard file called grafana-cloud-dashboard.json. After the import, you may want to generate some application activity by accessing the application API, either through the browser (http://localhost:8080/service/info) or through a small script doing that for you in the terminal:

    ./app-traffic.sh 10       # produces 10 API calls, 0.5 seconds apart

or

    ./app-traffic.sh 100 0 1  # 100 calls, each less than 1 second apart

The dashboard should look similar to the one at the beginning of the article, probably with fewer logs. The ./app-traffic.sh script has some instructions in the code to produce more calls.
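For readers who prefer to see the idea rather than the script, the core of such a traffic generator can be sketched in a few lines. This is not the actual app-traffic.sh; the function name `generate_traffic` and its arguments are hypothetical, and only the default URL is taken from the text above.

```shell
# generate_traffic COUNT DELAY [URL] (hypothetical): call the application API
# COUNT times, sleep DELAY seconds after each call, then print the number of
# calls that were attempted.
generate_traffic() {
  count="$1"
  delay="$2"
  url="${3:-http://localhost:8080/service/info}"
  i=0
  while [ "${i}" -lt "${count}" ]; do
    # -s: no progress output, -o /dev/null: discard the response body
    curl -s -o /dev/null "${url}" || echo "call $((i + 1)) failed" >&2
    i=$((i + 1))
    sleep "${delay}"
  done
  echo "${i}"
}

# Example: 10 calls, 0.5 seconds apart
# generate_traffic 10 0.5
```

Failed calls are reported on stderr but do not stop the loop, so a pod that is still starting up does not abort the run.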

To wrap up, here are a few comments about the dashboard itself. The dashboard shows two of the “static” pieces of information from the application (name, version), a timeline of log output, and a list of logs matching the timeline. You can filter the logs for certain levels with a drop-down selection in the top left. The timeline shows a color code that is aligned with the colors used in the log listing. If you hover over one of the bars, it shows the number of logs associated with that moment in time. Logs coming within 2 seconds are stacked on top of each other.

In the screenshot above we marked a smaller time interval to increase the resolution, show all log levels, and we have one moment where all log levels are triggered by the application. That was caused by scaling up the NodeJS application deployment (e.g. you can call ./app-scale.sh 3 to go from two instances to three — the app test-fires all log levels for testing purposes on startup).

The stacking of the log events is achieved through six parallel queries, each color-coded through “overrides”. You can see the details by going to the timeline panel’s title, opening the dropdown, and selecting edit.

The variable loglevel is defined through the dashboard’s settings, and the filtering is done through regular expressions in a processing pipeline. You can see the example for the “info” and “debug” levels. The color-coding is on the right side, where you can see four out of six overrides. So there is a lot going on here to visualize the logs… It may not be highly efficient, but this is more about showing the principle.
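To give a feel for what six parallel queries can look like, the sketch below prints one LogQL metric query per level. It is an assumption-heavy illustration, not the queries from the generated dashboard: the level names follow Grafana's usual log level naming, the line filter simply matches the level name anywhere in the log line, the 2-second range mirrors the stacking interval mentioned above, and the function name `print_level_queries` is hypothetical.

```shell
# print_level_queries APP (hypothetical): print one LogQL query per log level.
# Each query counts the log lines of stream {app="APP"} that match the level
# name, in 2-second windows.
print_level_queries() {
  app="$1"
  for level in critical error warning info debug trace; do
    printf 'sum(count_over_time({app="%s"} |~ "%s" [2s]))\n' "${app}" "${level}"
  done
}

print_level_queries myapp
```

In a panel, each of these would be a separate query with its own color override, which is what produces the stacked bars in the timeline.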

Where to go from here

As always, you are invited to experiment yourself. In this article, we off-loaded log processing and metrics visualization to a hosted service, which is a good thing. But you will need to be selective with what you measure and what you log. For example, we do not export all available metrics and use just a few standard metrics such as CPU utilization. Our setup measures much more, so you would need to have a close look at grafana-cloud.yaml.template, where the scrapes are configured, to add what you want to export in addition. If you change something, you can call ./grafana-cloud-deploy.sh to reinstall and see the results.

You can also have a look at a revised local dashboard: It uses a table instead of the logs panel and has a different filtering mechanism by using the table features. But this is what this is about: trying things and seeing what works best for a given purpose.

The local installation offers possibly more ability to customize locally running dashboards, e.g. change the timezone consistently across the board, automate deployments, and just have more metrics at hand without incurring costs.

The use of external Grafana/Prometheus allows you to undeploy the local Prometheus instance by calling ./prom-undeploy.sh, and still see logs. But many of the metrics are gone, as we reused the state-metric services that come with our Prometheus installation for Grafana Agent. To counter that, it is quite possible to install the state-metrics separately to scrape metrics, and use promtail to get logs without FluentBit/InfluxDB to make the overall local system less heavy.

In any case, good luck trying things.

Troubleshooting

Here are a few suggestions to check out if things don’t work out as described, specifically related to logs in Grafana Cloud:

- Make sure you actually generate logs, for example by calling ./app-traffic.sh 10 to call the application APIs a few times. If you don’t make the API calls, you can’t see logs.

- Check the local Grafana instance: without doing anything for Grafana Cloud, you should see logs on the local Grafana instance at http://localhost:8080 when navigating to the myapp dashboard. This indicates that logs are processed by FluentBit and InfluxDB. If not, check the logs of the FluentBit pods and fix this first. You can increase the log level at the top of the FluentBit configuration file, redeploy with ./fluentbit-deploy.sh, get the FluentBit pod name with kubectl get pods -n fluentbit, and extract the logs with kubectl logs YOUR-POD_NAME -n fluentbit. The output should show details about the FluentBit pipeline and other events, including loki traces. There may be error messages hinting at issues. You can also use Lens for more convenient access.

- Check the Grafana Cloud credentials by navigating to your Grafana Cloud Billing/Usage dashboard. In the lower half, there is a panel called Logs Ingestion Rate, which should show incoming logs. You may need to zoom in a bit with the time interval set in the upper right to see samples, e.g. pick “last hour” instead of the default “this month”. If there is nothing, you may not be producing logs, or there are authentication issues.

- Go to settings (gear icon) on the left side, select “Data sources” and pick the Loki data source called grafanacloud-YOUR-ORG-logs. At the bottom, you can use “Test” to verify that the data source is configured. You can also use “Explore” to run test queries. Start by typing a curly brace “{” in the query field, and it should show you labels that can be queried, in this case app. Select that, and complete the query, e.g. {app="myapp"}. Executing the query should show a visual similar to our own dashboard. If this does not work, check the logs for FluentBit.
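If you suspect the credentials rather than FluentBit, one more option is to push a single test line to Loki by hand. The sketch below uses Loki's HTTP push endpoint (/loki/api/v1/push) with basic auth. It assumes that LOKI_METRICS_PUBLISHER_URL holds a bare hostname, as in the FluentBit `host` setting, and that the user ID and API key are in the config.sh variables listed earlier; the function names `loki_payload` and `push_test_log` are hypothetical.

```shell
# loki_payload APP MESSAGE (hypothetical): build the JSON body for Loki's push
# API. MESSAGE must not contain double quotes, as no JSON escaping is done.
loki_payload() {
  ts="$(date +%s)000000000"   # seconds since epoch, padded to nanoseconds
  printf '{"streams":[{"stream":{"app":"%s"},"values":[["%s","%s"]]}]}\n' \
    "$1" "${ts}" "$2"
}

# push_test_log (hypothetical): send one log line and print the HTTP status.
# The user ID is the basic-auth user, the API key is the password.
push_test_log() {
  loki_payload "myapp" "hello from curl" |
    curl -s -o /dev/null -w '%{http_code}\n' \
      -u "${LOKI_METRIC_PUBLISHER_USER}:${GRAFANA_CLOUD_METRIC_PUBLISHER_KEY}" \
      -H "Content-Type: application/json" \
      -X POST --data-binary @- \
      "https://${LOKI_METRICS_PUBLISHER_URL}/loki/api/v1/push"
}

# Usage (after sourcing config.sh):
# push_test_log
```

A 2xx status (Loki normally answers 204) suggests that the endpoint and credentials are fine and the problem lies in the local pipeline, while a 401 points to the user ID or API key.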